Computational Creativity as Meta Search

Computational creativity is the art, science, philosophy and engineering of computational systems which, by taking on particular responsibilities, exhibit behaviors that unbiased observers would deem to be creative.

Machine learning (ML) has shown promise for many creative tasks such as music generation, image and painting generation, image style transfer, etc. In particular it has shown great success on creative tasks with large datasets. Computational creativity techniques that use machine learning train on a corpus of existing examples and learn a function that can be used to approximate the examples. While modern ML approaches have shown some ability to generalize, they are largely designed to produce output close to a set of exemplars. That is: mimicry. This feature is an inherent limitation of modern machine learning methods when applied to creative aesthetic domains where one might expect greater novelty.

But Human Creativity is More Complex

Human creativity appears more complex that just mimicry. Learning is still an important part of creativity. But as an individual learns the skills to create in a particular medium, the individual transitions through several stages of creative activity:

- Stage 1: mimicry — re-creating something the individual admires.

- Stage 2: exploration —as one gains experience, one can progressively deviate from known examples and explore a space nearby to known examples while largely obeying established conventions of a genre.

- Stage 3: redefinition — intentionally violating the conventions of a genre with the goal of inventing a new genre.

Stages 2 and 3 share a common trait: human creativity in these stages is intentional and goal-based. While skill learning and experience are important factors in human creativity, humans also seem to be able to intentionally manipulate their internal models to produce output no one has ever seen. For example, one can ask what could be created if a constraint is relaxed, some parameter is increased on decreased, something is added that is not normally there, or two value are combined in various ways.

This is very different from most ML based computational creativity. Instead of learning a model and sampling from a distribution over possible outputs, humans appear to learn a variety of knowledge and creatively recombine this knowledge to search through possibility spaces. In other words: by intentional manipulation of models, one is able to explore new models for which there might never have been training data, query those models to generate interesting and novel artifacts, and iteratively manipulate those models if necessary.

Making ML Creativity More Like Human Creativity

How do we make ML-based computational creativity algorithms look more like human creativity? Our proposal is to search through the space of hypothetical generative models, some of which exist or can exist and some of which cannot exist because they cannot be trained with existing data. If we can find those generative models and sample from them, then we can generate things that cannot otherwise be generated.

Why does this matter? Machine learning requires data. To create a truly novel thing one cannot first collect a large data set of examples of that thing. Consider the problem of generating computer games. We can generate new levels and maps for games because we can train on existing levels and maps. We can learn to recreate the mechanics of the game. But if I have an idea in mind for a different game that is unlike other existing games (stage 2 creativity), it doesn’t make sense for me to first design levels and write code for the game engine in order to train a system to make the game you’ve already made.

Consider Generative Adversarial Networks (GANs), one of the most commonly used techniques for automatically generating images. If one wants to generate a face of a person who never existed, one first collects thousands of pictures of faces of people who have existed. If one wants to generate a picture of an eagle, one must collect thousands of pictures of eagles. If one wants to generate a picture of a Pegasus, one must… but… what if there aren’t many photorealistic pictures of Pegasi? However, it is likely that one could train generative models of horses and birds. The recombination demonstrated by human creators suggests that an algorithm could be devised to explore all the ways of combining those two generative models until it finds one that generates a plausible-looking creature with the features of horses and birds.

We propose a novel approach to machine learning based computational creativity, inspired by human creativity: combinational meta search. Combinational meta search is an approach to computational creativity that uses search over the space of hypothetical models.

Computational Creativity Background

There is a long history of computational creativity. I won’t attempt a review. One general approach to computational creativity is Combinational creativity. Combinational creativity refers to creativity that takes the form of a combinational process joining familiar ideas in an unfamiliar way. There exist a number of formalized techniques for achieving combinational creativity including concept blending, amalgamation, and compositional adaption. At a high level these techniques allow the recombination of items from within a knowledge base, while retaining some of the structure from the parent items.

To illustrate concept blending, amalgamation, and compositional adaptation, consider different ways to combine the concepts of house and a boat. Assume we have a model of a house and a boat. For simplicity, the representation we will use for illustration encodes each concept as a graph and in terms of their uses and locations. The figure below (left) shows our models of house (input 1) and boat (input 2).

Concept blends merge elements that are similar to one another according to the knowledge base, but can include non-merged elements as well, thus leading to the “Houseboat” blend. Amalgams incorporate as much information as possible from both input spaces without merging, instead choosing between sufficiently similar elements. Compositional adaption breaks apart the individual elements of each input and composes novel concepts piece-by-piece, which allows it to generate smaller, novel concepts. The output concepts illustrated in Figure above (right) are a single output of many possible combinations from each technique.

Combinational creativity techniques cannot create new knowledge out of thin air, they require a knowledge base of concepts or cases. This knowledge base is typically encoded by domain experts. Machine learning based computational creativity can be thought of as first learning this knowledge base from data combinational meta-search introduces three new aspects

- The models being combined are generative, meaning they are paired with algorithms that can produce creative artifacts;

- Instead of manipulating models using a single, given combinational algorithm (concept blending, amalgams, compositional adaptation), we introduce a search that tries all combination algorithms and inspects what they are capable of generating;

- Combinational algorithms can be chained to expand the space of possible models to those for which there may have never been training data.

Combinational Meta Search

Combinational meta search is an optimization search over the space of all possible models for the class of artifact desired. Borrowing ideas from classical optimization search we can envision this space as a landscape where each point is a hypothetical generative model of varying degrees of desirability. The operators that allow the search algorithm to move around the space are the combinational algorithms enumerated above: concept blending, amalgams, and compositional adaptation.

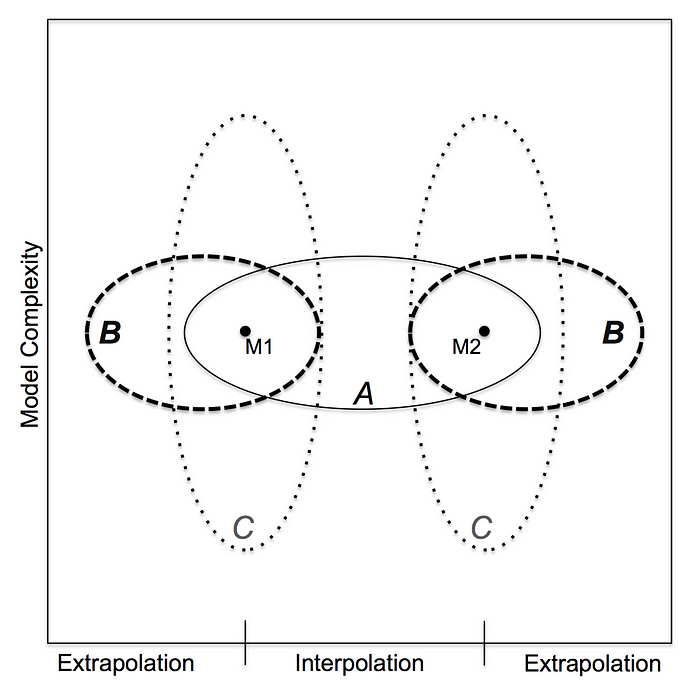

Consider the figure above. The box represents the space of all possible generative models for the type of artifact that we wish to generate. M1 and M2 represent models trained on different examples (e.g., a GAN trained to generate images of horses and a GAN trained to generate images of birds). All other points in space are hypothetical models representing training sets that do not exist. Each of the three combinational operators can only reach certain portions of the space of possible models:

- A: Amalgamation can generally be thought of as interpolating between two models.

- B: Concept blending can generally be thought of as expanding/extrapolating on a model by adding concepts from another model.

- C: Compositional adaptation can generally be thought of as increasing or decreasing model complexity by taking concepts from other models.

By chaining operations together, combinational meta search can reach unique parts of the space, such as the upper middle section of the figure by combining amalgamation and compositional adaption (e.g. a GAN that can generate images of Pegasi).

Combinational meta search can be implemented in the form of any number of optimization search algorithms including, but not limited to, blind hill-climbing, simulated annealing, genetic algorithm, or reinforcement learning.

Combinational meta search may be provided with more than two initial models. However, each combinational operator will only combine two models at a time. Notably, concept blending, amalgams, and compositional adaptation all require that components of the individual models must be comparable and a mapping between models must be computable.

The objective function for combinational meta search is the sum of three creativity measures originally proposed by Boden: novelty, surprise, and value. Novelty and surprise can be measured by directly comparing generative model structures. Novelty is a measure of the difference between a new model and the two input models. Surprise is a function of the history of previously generated things, and a divergence from this history. Value, on the other hand, requires the generated output of the models to be measured, and requires domain-specific heuristics.

Examples

Level Generation in Super Mario Bros.

As an illustration of the potential for this, and to give a deeper understand- ing of these techniques we ran a case study applying combinational creativity techniques to machine-learned models of Super Mario Bros. levels. We build on initial work on modeling and generating game levels using probabilistic graphical models. Here is an example of a Super Mario Bros. level generated using probabilistic graphical models to mimic level 1–1. The take-away is that the levels look (and evaluate with human subject studies) to be plausible alternatives to real Super Mario Bros. levels.

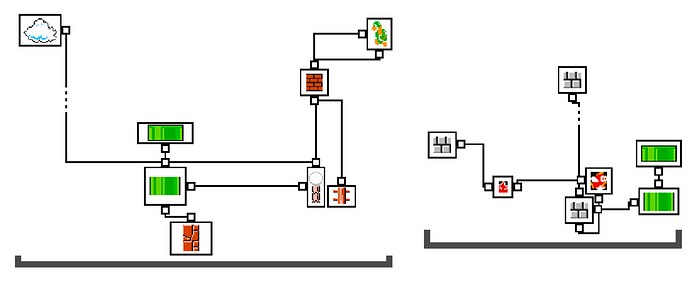

Below, we visualize the graphical models for two types of Super Mario Levels. For simplicity of visualization, we only show the most probable relationships between different types of sprites.

The graphical nature of probabilistic graphical models make the technique amenable to combinational meta search because a generative model is already in graphical form and it is relatively straightforward to map elements of one graph to another. (Note that we do have to provide a mapping function for combinational meta search to work. In this case, our mapping function first simplifies the structure of the probabilistic graphical models).

The following figure shows different generative models found by our combinational meta search technique. We generated each model using a one-step search with each of the combinational operators. The figure shows the graphical model and a representative screenshot of what the model can generate. We also show a randomly generated model for comparison.

The expansion operator wasn’t discussed above, but is basically a generalization of the other three, provably capable of generating the same models as blending, alamgamation, and compositional adaption plus more.

We use playability — in this case, the ability of a A* game playing agent to complete generated levels — as the domain-specific value metric.

One-Shot Learning with Neural Networks

Neural networks are also graphs. Therefore, we should be able to apply combinational meta search to find new models that are not learnable because of the lack of data but are combinations of other, learnable models.

Image recognition is one of the most common, and successful applications of deep convolutional neural networks. One of the reasons for the success is the relative availability of image data, which have been compiled into training sets. The CIFAR-10 dataset has tens of thousands of images sorted into 10 classes, including cats and dogs, but not foxes. The CIFAR-100 dataset has tens of thousands of images sorted into 100 classes, including cats, dogs, and foxes.

Suppose you wanted to learn an image recognizer for foxes, but you only had a handful of images — not enough to train a classifier. Further suppose you had a neural network trained to recognized classes of object in the CIFAR-10 dataset. This is a contrived example but is representative of the real-world problem of a new class of object coming into existence and needing to be recognized with few or no images available. As predictable, our network is confused by the fox images. We get some activation indicating it might be an airplane or a deer, or any number of other classes.

Under the intuition that foxes share many features of cats and dogs (biologists are barfing at the moment), combinational meta search attempts to produce new classifiers by re-combining the filters in the CIFAR-10 trained network in different ways. Deep convolutional neural networks are more complicated graphical structures than the above probabilistic graphical models, but they are still graphical structures. Indeed, each convolution can be thought of as a sub-graph that filters on a particular feature. Combinatorial meta search pseudo-randomly mixes and matches different parts of different filters until the network starts to achieve the best possible accuracy on the new class — foxes.

The fox experiment shows that when we have fewer than 100 images recombined neural networks can recognize foxes better than if we were to retrain the original neural network with the new fox data (below). H1 through H3 show results under different value functions (optimize for the accuracy across all classes, for fox classification accuracy only, or a normalized combination of the first two value functions).

How about Pegasi? With 10 photorealistic images of Pegasi, we were able to achieve up to 80% recognition in some experiments.

For more information on recombined neural network and the experiments above see our arXiv report.

Conclusions

Combinational meta search brings the theories of combinatorial creativity to machine learning by directly manipulating the graphical models learned from data. The technique is general, working on both probabilistic graphical models and neural networks.

Our work attempts to bridge the gap between human creativity and computer creativity by giving intelligent systems some ability to exert intentional control over the creative process. This is a small step, as combinatorial meta search is still guided by human-provided value functions. The meta search is, at this stage, simplistic, using a pseudo-random search. More sophisticated optimization search techniques — genetic algorithms, reinforcement learning, even neural networks that operate on neural nets — should work better.

Combinational meta search is a step toward bringing machine learning based computational creativity from stage 1 mimicry to stage 2 exploration.

Read More:

This article draws material from the following research papers and reports:

- Matthew Guzdial and Mark O. Riedl. Game Level Generation from Gameplay Videos. Proceedings of the 2016 AAAI Conference on Artificial Intelligence for Interactive Digital Entertainment.

- Matthew Guzdial and Mark O. Riedl. Learning to Blend Computer Game Levels. Proceedings of the 7th International Conference on Computational Creativity.

- Matthew Guzdial and Mark O. Riedl. Combinatorial Meta Search. Proceedings of the NIPS 2017 Workshop on Machine Learning for Creativity and Design.

- Matthew Guzdial and Mark O. Riedl. Combinatorial Creativity for Procedural Content Generation via Machine Learning. Proceedings of the AAA 2018 Workshop on Knowledge Extraction in Games.

- Matthew Guzdial and Mark O. Riedl. Combinets: Learning New Classifiers via Recombination. arXiv:1802.03605.